I have several Raspberry Pi 3s and 4s out in the field running Raspbian/Raspberry Pi OS/Debian 9/12/13, and like everything else they have Chef running on them. These are 32-bit armv7l and 64-bit aarch64 platforms, and installers normally aren’t built for them, so you gotta do it yourself. I’m not a fan of just spewing files or gems onto a filesystem; I want my stuff in proper Debian packages. mattray’s build instructions and parts of the DEB-chef-cinc.sh script got me through the switchover to building Debian packages for Cinc 15/16/17 on my own, but I started having problems on Cinc 18.

I have practically no expertise with Ruby bundler and Omnibus, so I’ve just been using whatever big hammer works for now. There are surely proper ways to fix my problems that I haven’t spent time on yet. I do Cinc builds for these maybe twice a year, so for me it’s not worth the effort to make it perfect. These are my notes on what I’ve been doing, which may help others and will help me in case I lose the Raspberry Pi I use for builds. Apologies for the clowntown.

omnibus-toolchain and libatomic.so.1

Omnibus-toolchain is an intermediate step to get working omnibus tools to start building the real Chef/Cinc installer. It’s installed as a Debian package to /opt/omnibus-toolchain/. It doesn’t have to be built every single time a new minor release of Chef comes out, but it does need to be rebuilt periodically. That’s a good thing, because on an RPi 3 it takes several hours as it builds ruby, openssl, and a ton of everything else. Building Chef isn’t nearly as bad but still takes a couple of hours.

In particular, on Raspberry Pi 3 (armv7l) I had a problem with omnibus-toolchain: binaries were being linked against libatomic.so.1, but that library wasn’t being bundled in:

[HealthCheck] E | 2025-08-30T17:48:15-05:00 | The following libraries have unsafe or unmet dependencies:

--> /opt/omnibus-toolchain/embedded/libexec/git-core/git-remote-http

--> /opt/omnibus-toolchain/embedded/libexec/git-core/git-http-fetch

--> /opt/omnibus-toolchain/embedded/libexec/git-core/git-http-push

--> /opt/omnibus-toolchain/embedded/libexec/git-core/git-imap-send

--> /opt/omnibus-toolchain/embedded/lib/ruby/3.1.0/armv7l-linux-eabihf/openssl.so

--> /opt/omnibus-toolchain/embedded/lib/libcrypto.so.3

--> /opt/omnibus-toolchain/embedded/lib/engines-3/padlock.so

--> /opt/omnibus-toolchain/embedded/lib/engines-3/afalg.so

--> /opt/omnibus-toolchain/embedded/lib/engines-3/capi.so

--> /opt/omnibus-toolchain/embedded/lib/engines-3/loader_attic.so

--> /opt/omnibus-toolchain/embedded/lib/libcurl.so.4.8.0

--> /opt/omnibus-toolchain/embedded/lib/ossl-modules/legacy.so

--> /opt/omnibus-toolchain/embedded/lib/libssl.so.3

[HealthCheck] E | 2025-08-30T17:48:15-05:00 | The following binaries have unsafe or unmet dependencies:

--> /opt/omnibus-toolchain/embedded/bin/openssl

--> /opt/omnibus-toolchain/embedded/bin/curl

[HealthCheck] E | 2025-08-30T17:48:15-05:00 | The following libraries cannot be guaranteed to be on target systems:

--> /usr/lib/arm-linux-gnueabihf/libatomic.so.1 (0x766e8000)

--> /usr/lib/arm-linux-gnueabihf/libatomic.so.1 (0x767fc000)

Apparently this is only a Raspberry Pi 3 problem, as this library works around some shortcomings in the armv7 architecture.
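
To narrow down which files pull in the system libatomic before kicking off another multi-hour build, something like the following could help. It is only a sketch: the function name `libatomic_outside` is made up for illustration, it assumes the usual `name => /path (0x...)` format of `ldd` output, and it assumes the install dir is /opt/omnibus-toolchain as in the health check log above.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper, not part of omnibus): read `ldd` output on
# stdin and print the resolved path of libatomic when it comes from
# somewhere outside the given install dir.
libatomic_outside() {
    local prefix="$1"
    # ldd lines look like: "libatomic.so.1 => /usr/lib/.../libatomic.so.1 (0x...)"
    # Keep only libatomic entries that resolved to an absolute path which
    # does not start with the install dir.
    awk -v p="$prefix/" '
        $1 ~ /^libatomic\.so/ && $2 == "=>" && $3 ~ /^\// && index($3, p) != 1 { print $3 }
    '
}

# Hypothetical usage on the build host:
#   ldd /opt/omnibus-toolchain/embedded/bin/openssl | libatomic_outside /opt/omnibus-toolchain
```

If this prints a path under /usr/lib, the binary is still relying on the system copy, which is what the health check is complaining about.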

After beating on Claude for a while, what I wound up doing was creating a config file for libatomic within the git checkout of omnibus-toolchain (omnibus-toolchain/config/software/libatomic.rb):

name "libatomic"

default_version "1.0"

license :project_license

build do

command "mkdir -p #{install_dir}/embedded/lib"

command "cp -L /usr/lib/arm-linux-gnueabihf/libatomic.so.1* #{install_dir}/embedded/lib/ || true"

command "cp -L /usr/lib/aarch64-linux-gnu/libatomic.so.1* #{install_dir}/embedded/lib/ || true"

end

and adding a dependency line to omnibus-toolchain/config/projects/omnibus-toolchain.rb:

...

dependency "omnibus-toolchain"

dependency "ruby-cleanup"

dependency "libatomic" # XXX: local fix

exclude '\.git*'

exclude 'bundler\/git'

...

After nuking the caches under /var/cache/omnibus and starting another build, I finally got a working omnibus-toolchain_3.0.41~20260117203037-1_armhf.deb package.
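
A quick way to confirm the library really made it into the package is to look at the package listing. This is a sketch under assumptions: `deb_bundles_libatomic` is a made-up helper name, and it expects the listing produced by `dpkg-deb -c` on stdin.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper): succeed if a `dpkg-deb -c` listing on stdin
# contains libatomic.so.1 under the embedded lib directory.
deb_bundles_libatomic() {
    grep -q '/embedded/lib/libatomic\.so\.1'
}

# Hypothetical usage (substitute your own package file name):
#   dpkg-deb -c omnibus-toolchain_*_armhf.deb | deb_bundles_libatomic \
#       && echo "libatomic is bundled" \
#       || echo "libatomic is missing from the package"
```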

git 2.11 on Raspbian 9 (Stretch) is too old

The copy of Raspbian 9 I had on my Raspberry Pi 3s included git 2.11. Apparently it did not like doing a --depth 1 fetch and silently errored out.

Fetching https://github.com/chef/omnibus-software.git

Git error: command git fetch --force --quiet /home/omnibus/omnibus-....

[omnibus@canary01 omnibus-software-2799d14cecc5]$ git fetch --force /home/omnibus/omnibus-toolchain/.bundle/ruby/3.1.0/cache/bundler/git/omnibus-software-338444b7bc50110d52bb35857ad8f946482db0ef --depth 1 2799d14cecc59c6a351c5cba3761c01fff9a65a7

[omnibus@canary01 omnibus-software-2799d14cecc5]$ echo $?

1

[omnibus@canary01 omnibus-software-2799d14cecc5]$ ls -l

total 0

[omnibus@canary01 omnibus-software-2799d14cecc5]$

Building git 2.44 and installing it to /usr/local/bin/git fixed the problem. I also had to install libcurl4-openssl-dev, libcurl4-gnutls-dev, libexpat1-dev, gettext, and zlib1g-dev so that the git build would also produce the required git-remote-https.
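
Since the failure is silent, it may be worth checking the git version up front. The sketch below is an assumption-heavy illustration: `version_ge` and `git_version` are made-up helper names, it relies on GNU `sort -V`, and the threshold of 2.44.0 is simply the version that worked here, not a known minimum.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helpers): compare dotted version strings with GNU sort -V.

# True when version $1 is at least version $2.
version_ge() {
    [ "$(printf '%s\n' "$1" "$2" | sort -V | head -n1)" = "$2" ]
}

# Print the version number from `git --version` ("git version 2.11.0" -> "2.11.0").
# An alternate git binary can be passed as $1.
git_version() {
    "${1:-git}" --version | awk '{ print $3 }'
}

# Hypothetical usage; 2.44.0 is the known-good version from this post,
# the real minimum is unknown:
#   version_ge "$(git_version /usr/local/bin/git)" 2.44.0 || echo "git may be too old"
```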

license_scout can not detect licensing information

I was hitting this on both my 32-bit and 64-bit builds of Cinc 18, so it seems like a new thing. Best I can remember, license_scout was trying to fetch a hardcoded URL for a license file on GitHub which no longer existed, and it would require patching to fix?

[Builder: chef] I | 2023-10-26T07:46:42-05:00 | Finished build

[Licensing] W | 2023-10-26T07:46:47-05:00 | Can not automatically detect licensing information for 'chef' using license_scout. Error is: 'Network error

while fetching 'https://raw.githubusercontent.com/socketry/multipart-post/main/README.md'

404 Not Found'

Encountered error(s) with project's licensing information.

Failing the build because :fatal_licensing_warnings is set in the configuration.

Error(s):

Can not automatically detect licensing information for 'chef' using license_scout. Error is: 'Network error while fetching 'https://raw.githubusercontent.com/socketry/multipart-post/main/README.md'

404 Not Found'

By now I was pretty frustrated and just wanted the damn thing to build, so I changed the build command from the DEB-chef-cinc.sh instructions to this:

bundle exec omnibus build cinc -l internal --override fatal_licensing_warnings:false --override fatal_transitive_dependency_licensing_warnings:false

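I haven’t dug into exactly what this does. Going by the error message above, it just stops licensing warnings from failing the build, which probably means my packages are missing license files or something and shouldn’t be distributed.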

cinc vs cinc-full source tarballs

In the Cinc source code repository, there are two types of source tarballs. One is named cinc-full-X.XX.XX.tar.xz and the other is cinc-X.XX.XX.tar.gz. The cinc-full tarballs contain an additional omnibus-software directory and seem to exist only for new minor version releases, not every point release (e.g. 18.1.0 vs 18.8.54). I really don’t know what the difference is and haven’t got around to asking. I’ve been successful building the cinc-full versions, such as 18.1.0, but was having problems with newer point releases. On 18.8.54 I tried copying the cinc-full-18.1.0/omnibus-software directory over and made the two directory trees look similar, and if I remember right, my problems went away. So I’m guessing this is the omnibus build config for that particular minor tree, and it’s good enough to build successive releases.
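
The copy step could look roughly like this. It is a sketch only: `graft_omnibus_software` is a made-up name, the directory names in the usage comment are assumed from the tarball names, and it covers just the copy, not whatever other tweaks were needed to make the two trees look similar.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper): copy omnibus-software from an unpacked
# cinc-full tree into an unpacked point-release tree.
graft_omnibus_software() {
    local full_tree="$1" point_tree="$2"
    if [ ! -d "$full_tree/omnibus-software" ]; then
        echo "no omnibus-software directory in $full_tree" >&2
        return 1
    fi
    if [ -e "$point_tree/omnibus-software" ]; then
        # Leave an existing directory alone rather than overwrite it.
        echo "$point_tree already has omnibus-software, leaving it alone" >&2
        return 0
    fi
    # -a preserves permissions, timestamps, and symlinks.
    cp -a "$full_tree/omnibus-software" "$point_tree/"
}

# Hypothetical usage, with both tarballs unpacked side by side:
#   graft_omnibus_software cinc-full-18.1.0 cinc-18.8.54
```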

Other tips and notes

- You don’t have to build Ruby every single time you run the DEB-chef-cinc.sh script. I have it commented out and only do it when necessary. For building Cinc 17 I needed to bump up from Ruby 2.7.4 to 2.7.8, and then for Cinc 18 I needed to build again to get to Ruby 3.1.4, which would provide the required bundler 2.x.

- If you skip build steps such as Ruby, make sure you still run the rbenv init - step and add $HOME/.rbenv/bin to your PATH. This makes sure you’re getting the new versions of Ruby and bundler, not what’s on your system. If you’re skipping building omnibus-toolchain, make sure /opt/omnibus-toolchain/bin is in your PATH too, since it has its own versions of bundle, ruby, and gem.

e.g.

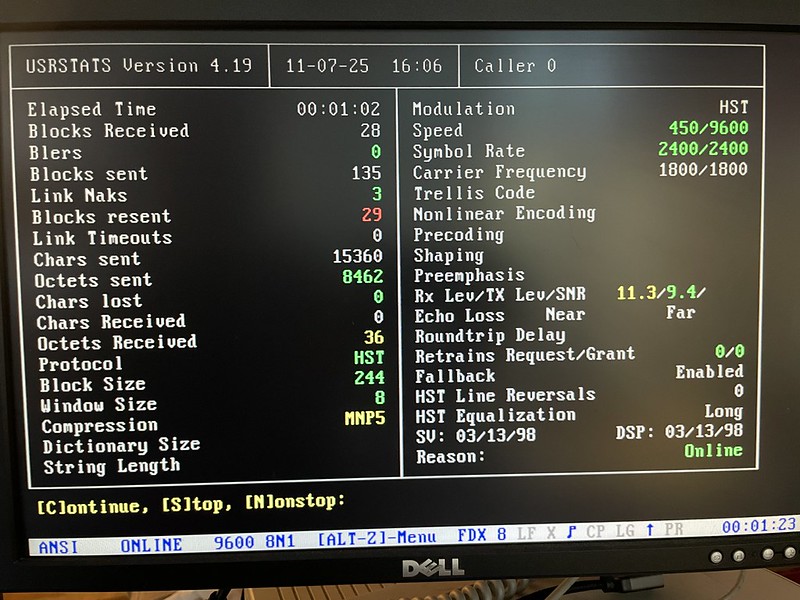

root@canary01:/home/omnibus# ./.rbenv/versions/3.1.4/bin/ruby --version

ruby 3.1.4p223 (2023-03-30 revision 957bb7cb81) [armv7l-linux-eabihf]

root@canary01:/home/omnibus# ./.rbenv/versions/3.1.4/bin/bundle --version

Bundler version 1.16.0

root@canary01:/home/omnibus# /opt/omnibus-toolchain/embedded/bin/ruby --version

ruby 3.1.6p260 (2024-05-29 revision a777087be6) [armv7l-linux-eabihf]

root@canary01:/home/omnibus# /opt/omnibus-toolchain/embedded/bin/bundle --version

Bundler version 2.3.27

[omnibus@canary01 omnibus]$ echo $PATH

/opt/omnibus-toolchain/bin:/home/omnibus/.rbenv/shims:/home/omnibus/.rbenv/bin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin:/usr/local/games:/usr/games

- When building all this, your real Cinc files in /opt/cinc get used, trashed, and overwritten, so you really do need to have a working Cinc in place first and chown it to omnibus:omnibus so the build processes can use it as a build directory (surely this can be avoided). After you’re done building your new package, you can install it on your system and that makes it all work again.

- When building Cinc 18.8.54 I hit an error saying the version of Ruby I had (it was sourcing /opt/cinc/embedded/ruby) wasn’t new enough for some ssl thing, so I had to build Cinc 18.1.0 first, install it, and then I could use it to build 18.8.54.

- Another occasional issue I had was that during the omnibus build of chef, it would complain that /opt/cinc/bin/chef-apply already existed and it couldn’t handle this (despite having full ownership of the directory). When this happened I’d just rm /opt/cinc/bin/*, re-run the build, and that let it go on its merry way.
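
The PATH setup from the tips above can be sketched as a small file to source before building. This is an illustration under assumptions: `path_prepend` and `setup_build_path` are made-up names, and the directories are the ones from the notes above (rbenv under $HOME/.rbenv, the toolchain under /opt/omnibus-toolchain).

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helpers): put rbenv and omnibus-toolchain ahead of
# the system Ruby tools, even when their build steps are skipped.

# Prepend a directory to PATH unless it is already in there.
path_prepend() {
    case ":$PATH:" in
        *":$1:"*) ;;               # already present, nothing to do
        *) PATH="$1:$PATH" ;;
    esac
}

setup_build_path() {
    path_prepend "$HOME/.rbenv/bin"
    # Only initialise rbenv if it is actually installed; this adds the shims dir.
    if command -v rbenv >/dev/null 2>&1; then
        eval "$(rbenv init -)"
    fi
    # The toolchain goes first so its bundle, ruby, and gem win.
    path_prepend /opt/omnibus-toolchain/bin
    export PATH
}

# Hypothetical usage: source this file, run setup_build_path, then check
# `bundle --version` before starting the build.
```

The ordering is meant to mirror the PATH shown in the example above, with /opt/omnibus-toolchain/bin ahead of the rbenv shims.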