I guess sometimes you have to challenge your assumptions and just try things. For years and years, at home and in Oklahoma, I have been running Hurricane Electric’s Tunnelbroker tunnels to get IPv6 to my networks. At the time I set them up, everything said the 6in4 tunnel (specifically IP protocol 41) wouldn’t work through NAT, and that you would have to set up the local tunnel endpoint on the same router that gets the public IP address from your ISP. So that’s what I did: I used my own routers and configured my VDSL and cable modems to work in bridge mode so the real public IP address was directly on my router and my tunnel traffic wasn’t being NAT’d. This has worked great for years and years and I never had to think about the config again.

I recently discovered that this assumption is not quite right: 6in4 tunnels can indeed work across a NAT, even double NAT.

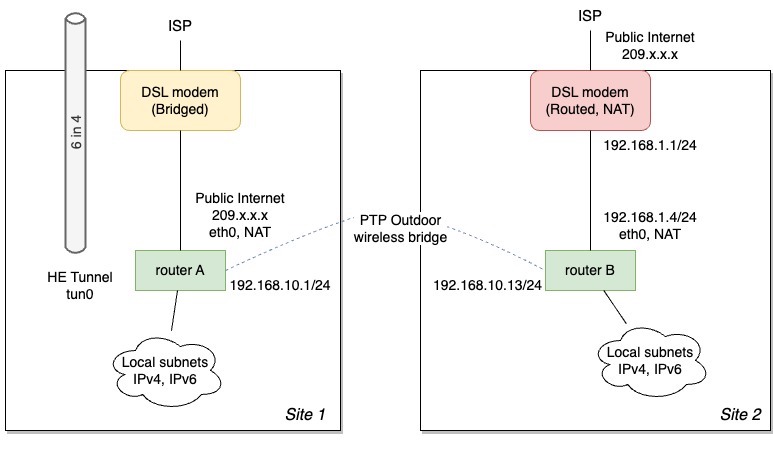

For various reasons I had two sites in Oklahoma, each with its own DSL connection to the same ISP, and interconnected with a wireless point to point bridge. At “Site 1” I got the ISP to configure their VDSL modem in bridge mode and I provided my own EdgeRouter. I configured PPPoE on my router and got the public IP address on my router. On this router I configured my Tunnelbroker tunnel, and through it I funneled the IPv6 subnets from both sites. Locally originated IPv4 traffic went out the local DSL connection.

Site 2 was a little more traditional, here I was using the ISP’s provided VDSL modem+router (Comtrend CT-5374) which acted as a NAT; public IP address on the WAN interface, 192.168.1.x/24 on the LAN side. Behind it was another one of my EdgeRouters that had an interface that connected back to site 1, and the local LANs attached to it. IPv4 things here going out to the Internet wound up being double NAT’d, first through my EdgeRouter, and then NAT’d again through the ISP router. Again locally originated IPv4 traffic went out the local DSL connection. Any v6 traffic was hauled over to Site 1 where the Tunnelbroker tunnel was.

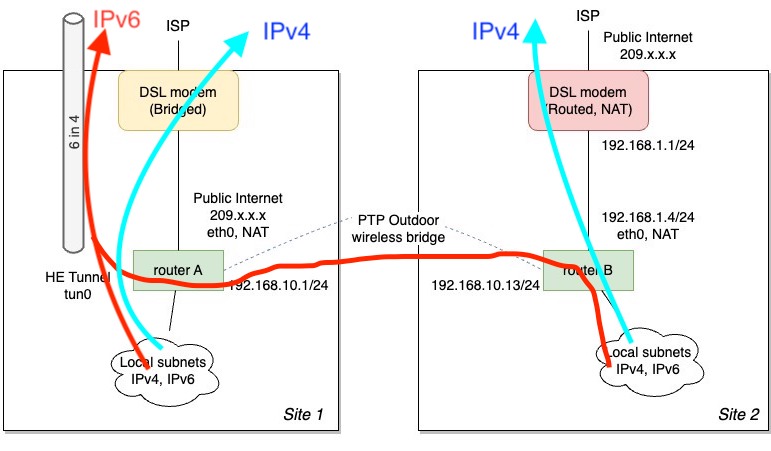

Local IPv4 traffic went out the local VDSL connection, IPv6 traffic went out the tunnel on router A:

Sadness and a surprise

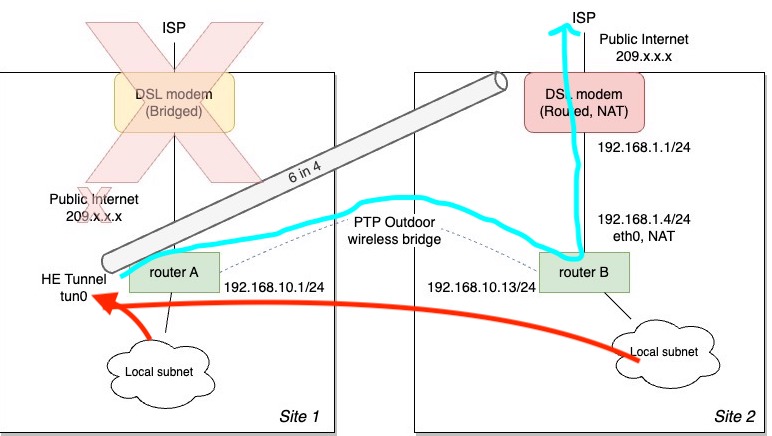

This all worked great for years until this summer, when the VDSL modem at Site 1 (in bridge mode) up and died. I had a spare modem but I couldn’t get it to work with the ISP. This broke my IPv6 connectivity because Site 1 no longer had any direct Internet connectivity. This made me sad because I didn’t have another bridged modem and just (wrongly) assumed the tunnel wouldn’t work through the NAT at Site 2. I was gonna have to beg the ISP to change the config for me, or do some janky port forwarding, gnashing of teeth, resort to setting up an IPsec tunnel, etc etc etc.

One day I was changing default routes on Router A at Site 1 to send all traffic to Router B at Site 2, so I’d at least have working IPv4 Internet access at Site 1. A few minutes later I started getting recovery alerts that all of my IPv6 hosts were reachable again. Huh?

Initially I thought the modem at Site 1 started working again, but checking again it was in fact still dead and everything was now running through Site 2. I thought about it for a minute, why wouldn’t IP protocol 41 work through a NAT? And for that matter, through double NAT? We can translate ICMP (IP protocol 1) through it just fine, and from an IP perspective, it’s just another protocol number.

I got to re-reading all of the forums discussing the setup of Tunnelbroker connections and noticed none of them really outright say “this won’t work through NAT”. Instead it was always strongly implied it might or might not depending on the vendor’s NAT implementation, “it’s best if you do this instead”, caveat emptor, you’re on your own, etc etc. So if your NAT was really just doing only UDP/TCP PATs, or had something obnoxious that specifically blocked IP protocol 41 (I hear AT&T used to do this for “security”), or didn’t have full mapping from the public address like with CGNAT, this wouldn’t work. But from a pure NAT standpoint on paper, it would work fine.

Normally I’d have packet captures to prove to myself how it works, but in this situation I got lazy because there’s a lot of v6 traffic going in and out and it’s annoying to sort it out right now. I got to looking and there was no special IP protocol 41 NAT config on the ISP’s router (I cheated and have access on it) nor on my EdgeRouter. More annoyingly the ISP’s router doesn’t provide any way to dump the full NAT translation table to prove my situation, just a list of the PATs which doesn’t show non UDP/TCP things.

I would assume that from a first-power-on sequence, there would have to be one outbound v6 packet to get the two NAT translations set up. After that, both routers know how to forward packets to/from the Tunnelbroker. It’s worth noting that the other direction works too: Internet -> inside connections work fine, and I can SSH directly to my v6 hosts from the v6 Internet. In my particular setup I have a script that tries to ping a host on the Internet to check for v6 reachability before trying to send a command to the tunnel API to update the endpoint address, so this seems to fill the first packet checkbox.
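A rough sketch of that kind of check-then-update logic, in Python, might look like the following. This is not the actual script: the function names are made up for illustration, the `ping` flags assume Linux iputils, and the update URL is a placeholder that would have to come from the Tunnelbroker documentation for your tunnel.

```python
import subprocess
import urllib.request


def v6_reachable(host: str, count: int = 3, timeout_s: int = 5) -> bool:
    """Return True if `ping -6` gets at least one reply from host.

    Assumes a Linux iputils-style ping (-6, -c, -W). The echo request is
    also an outbound packet through the tunnel, which is what would prime
    any NAT state along the path.
    """
    result = subprocess.run(
        ["ping", "-6", "-c", str(count), "-W", str(timeout_s), host],
        stdout=subprocess.DEVNULL,
        stderr=subprocess.DEVNULL,
    )
    return result.returncode == 0


def update_endpoint(update_url: str) -> str:
    """Call the tunnel provider's endpoint-update URL and return the reply.

    update_url is a placeholder; the real URL and its parameters are not
    given in this post.
    """
    with urllib.request.urlopen(update_url, timeout=10) as resp:
        return resp.read().decode()


def check_then_update(probe_host, update_url,
                      probe=v6_reachable, update=update_endpoint) -> str:
    """Only touch the tunnel API when the v6 probe fails."""
    if probe(probe_host):
        return "reachable"
    return update(update_url)
```

The probe and update steps are passed in as parameters so the decision logic can be exercised without touching the network.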

For egress IPv6 traffic, the path looks something like this:

Packet leaves host -> hits v6 gateway on router A -> v6 packet goes to tunnel tun0 interface where it’s encapsulated as 6in4. The 6in4 packet is destined for the Tunnelbroker server as an ordinary IPv4 packet sourced from 192.168.10.1. It gets routed over the wireless bridge to router B, NAT’d to 192.168.1.4, hits the ISP’s router, where it’s NAT’d once again to the public IP address, and is forwarded out to the Internet.
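To make the encapsulation step concrete, here is a minimal Python sketch of what 6in4 does to a packet: it prepends a plain 20-byte IPv4 header whose protocol field is 41 and leaves the IPv6 packet untouched as the payload. The helper names and the 2001:db8:: addresses are illustrative assumptions; the real work is done by the router's tunnel interface, not by code like this.

```python
import socket
import struct

IPPROTO_6IN4 = 41  # IP protocol number carried in the outer IPv4 header


def ipv4_checksum(header: bytes) -> int:
    """Internet checksum over an IPv4 header (one's complement sum)."""
    total = 0
    for i in range(0, len(header), 2):
        total += (header[i] << 8) | header[i + 1]
    while total > 0xFFFF:
        total = (total & 0xFFFF) + (total >> 16)
    return ~total & 0xFFFF


def minimal_ipv6_packet(src_v6: str, dst_v6: str) -> bytes:
    """Bare 40-byte IPv6 header: no payload, Next Header 59 (none)."""
    return (
        struct.pack("!IHBB", 6 << 28, 0, 59, 64)
        + socket.inet_pton(socket.AF_INET6, src_v6)
        + socket.inet_pton(socket.AF_INET6, dst_v6)
    )


def encapsulate_6in4(ipv6_packet: bytes, src_v4: str, dst_v4: str,
                     ttl: int = 64) -> bytes:
    """Wrap an IPv6 packet in an IPv4 header with protocol 41."""
    header = struct.pack(
        "!BBHHHBBH4s4s",
        (4 << 4) | 5,            # version 4, header length 5 words
        0,                       # DSCP/ECN
        20 + len(ipv6_packet),   # total length
        0, 0,                    # identification, flags/fragment offset
        ttl,
        IPPROTO_6IN4,
        0,                       # checksum placeholder
        socket.inet_aton(src_v4),
        socket.inet_aton(dst_v4),
    )
    checksum = ipv4_checksum(header)
    return header[:10] + struct.pack("!H", checksum) + header[12:] + ipv6_packet


# Example: what router A would hand to router B (addresses from the text,
# inner v6 addresses are documentation-range placeholders).
inner = minimal_ipv6_packet("2001:db8::1", "2001:db8::2")
outer = encapsulate_6in4(inner, "192.168.10.1", "216.66.77.230")
```

Note that the outer header has no ports at all, only addresses and a protocol number, which is all a NAT has to work with.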

The return reply comes in on the ISP’s router, where the NAT table says it came from 192.168.1.4. It gets forwarded to the EdgeRouter, which in turn resolves the NAT and sends it to 192.168.10.1. Once it’s back on router A, it goes through the tun0 interface, and out pops an IPv6 packet.
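The two hops of translation can be modeled with a toy source NAT in Python. This is only a sketch under a big assumption: that each NAT tracks portless protocols by protocol number and remote address, which is my guess at the behavior and not something confirmed from either router. The public address 203.0.113.10 is a documentation-range placeholder.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Packet:
    proto: int
    src: str
    dst: str


@dataclass
class SourceNat:
    """Toy source NAT for protocols without ports (assumed behavior)."""
    outside_addr: str
    # (proto, remote address) -> original inside source address
    table: dict = field(default_factory=dict)

    def outbound(self, pkt: Packet) -> Packet:
        # The first outbound packet creates the translation entry.
        self.table[(pkt.proto, pkt.dst)] = pkt.src
        return Packet(pkt.proto, self.outside_addr, pkt.dst)

    def inbound(self, pkt: Packet):
        # Without a matching entry there is nowhere to send the packet.
        inside = self.table.get((pkt.proto, pkt.src))
        if inside is None or pkt.dst != self.outside_addr:
            return None
        return Packet(pkt.proto, pkt.src, inside)


TUNNEL_SERVER = "216.66.77.230"
PUBLIC_IP = "203.0.113.10"  # placeholder for the ISP router's WAN address

router_b = SourceNat("192.168.1.4")
isp_router = SourceNat(PUBLIC_IP)

# Before anything has gone out, an inbound protocol 41 packet is dropped.
dropped = isp_router.inbound(Packet(41, TUNNEL_SERVER, PUBLIC_IP))

# Egress: router A -> router B -> ISP router -> Internet.
egress = isp_router.outbound(
    router_b.outbound(Packet(41, "192.168.10.1", TUNNEL_SERVER)))

# Return path: both tables now hold state, so the reply finds router A.
reply = router_b.inbound(
    isp_router.inbound(Packet(41, TUNNEL_SERVER, PUBLIC_IP)))
```

In this model `dropped` is `None`, while `reply` ends up addressed to 192.168.10.1, which matches the "one outbound packet first" behavior described above.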

NAT translation on router B, 216.66.77.230 is the Tunnelbroker server, “unknown” protocol:

    $ show nat translations source address 192.168.10.2
    Pre-NAT src          Pre-NAT dst          Post-NAT src         Post-NAT dst
    192.168.10.2         216.66.77.230        192.168.1.4          216.66.77.230
      unknown: snat: 192.168.10.2 ==> 192.168.1.4  timeout: 599  use: 1

TIL.

This would have had some interesting implications had I figured it out sooner. One problem I’ve always dealt with on EdgeRouters is that the 6in4 encapsulation happens on the general purpose CPU and is not hardware accelerated. As such I was always bottlenecked on my Internet IPv6 throughput using a tunnel. My solution was to buy a faster EdgeRouter and then eventually come up with an IPv6 policy based routing setup where I could use native (read: faster) Comcast IPv6 for day to day stuff while keeping my servers on the HE IPv6. Had I known what I know now, I could’ve moved my HE tunnel to a Linux box to offload the work of processing 6in4 encapsulation and saved a bunch of (admittedly interesting) workarounds.

I need to get around to moving the HE tunnel from router A to router B in Oklahoma so I can finally deprecate Site 1, but that’s a project for a future time.

Update 28-Dec-2023:

While in Oklahoma I did some fiddling with the EdgeRouter and found some shortcomings. I had been running version 1.10.10 of their software and attempted to update to 2.0.9 on router B. When the router came back up, the tunnel was broken. Also doing things like moving the tunnel between router A and B necessitated a reboot of B, so there’s some sort of state I’m losing. (Keep in mind my external public IP address doesn’t ever change during all this because it’s on the ISP’s CPE router)

What was interesting after upgrading router B to 2.0.9 was that tcpdump on the interface facing the ISP router showed lots of IP protocol 41 packets very clearly coming in from the Tunnelbroker server to my inside NAT address on router B. But they weren’t being forwarded to router A, which was configured with the tunnel. As far as I can tell they were just being blackholed. Likewise, tcpdump on my tunnel router A showed IP protocol 41 packets being originated and destined for the Tunnelbroker server on the Internet, but they weren’t being passed on beyond router B. This seems to imply that some sort of implicit NAT translation on router B was missing after the 2.0.9 upgrade to let them flow back and forth. I tried a number of static destination and even source NAT rules on router B that looked like they should shoehorn inbound traffic to the tunnel router A and handle the return traffic, but nothing seemed to do the trick.
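For sorting this kind of traffic out of a capture (for example one taken with a tcpdump filter like `ip proto 41`), a small Python helper along these lines can pull the outer and inner addresses out of a raw IPv4 packet. The function name is made up and this is only a sketch: it ignores fragmentation and does no checksum validation.

```python
import ipaddress


def describe_6in4(ipv4_packet: bytes):
    """Return (outer_src, outer_dst, inner_src, inner_dst) or None.

    None means the bytes are not an IPv4 packet carrying protocol 41
    with a full 40-byte IPv6 header inside.
    """
    if len(ipv4_packet) < 20 or ipv4_packet[0] >> 4 != 4:
        return None
    ihl = (ipv4_packet[0] & 0x0F) * 4  # IPv4 header length in bytes
    if ihl < 20 or ipv4_packet[9] != 41:
        return None
    inner = ipv4_packet[ihl:]
    if len(inner) < 40 or inner[0] >> 4 != 6:
        return None
    return (
        str(ipaddress.IPv4Address(ipv4_packet[12:16])),
        str(ipaddress.IPv4Address(ipv4_packet[16:20])),
        str(ipaddress.IPv6Address(inner[8:24])),
        str(ipaddress.IPv6Address(inner[24:40])),
    )
```

Run against packets captured on both sides of router B, a helper like this would show which outer addresses the protocol 41 traffic carries at each hop, and therefore which translation is missing.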

I reverted back to 1.10.10 with the exact same original configuration (that had no special protocol 41 NATs). If I recall correctly, the tunnel wasn’t working at all, and then suddenly on its own 5-10 minutes later it spontaneously started working and pings to a v6 Internet host worked. I didn’t capture that bit in tcpdump, so I’m not sure what went by to knock it loose.

There were some times when I’d reboot that the tunnel wouldn’t work, I’d give up and reboot it again. Then it would work on the next boot. The last thing I did was to move the tunnel endpoint over to router B to consolidate and simplify things. I would’ve expected the tunnel to just start working again after the move. Inbound protocol 41 traffic was still coming in from the Internet, and I was generating response traffic, but it was still being blackholed again on router B. After a reboot, IPv6 traffic to/from the Internet started working again. I don’t know what the deal was, maybe a stale IP route cache or a stale translation?

I ran out of time to dig deeper to find a more respectable root cause. I’ll have to try the 2.0.9 upgrade again on the next trip to see if I can get it to stick, or find out if there really was a change that broke it.