Stop doing this!

The Anaconda 19 (now v21) installer in RHEL 7 / CentOS 7 is a great improvement over the Anaconda 13 used in CentOS 6; among other fixes, it was completely overhauled along the way. One thing lacking in CentOS 6 was the ability to perform an automatic kickstart installation over an IPv6-only network. Some bits within the kickstart configuration could be fetched over v6, but the whole process from kernel boot to completion needed to be dual-stacked. For example, the ipv6 kernel module wasn’t loaded before Anaconda’s loader tried to download install.img, so it failed on a v6-only network. Later, in stage2, we could do things like download from v6 package repos.

Installing completely over v6 is now possible in CentOS 7 although it takes some tweaks to be reliable in the datacenter.

A few assumptions and preconditions for this post:

- Servers need static IP addresses, for DNS mapping and service discovery. SLAAC is out because if the NIC is swapped out for a repair, the address changes.

- During PXE boot a server is given its IP address from a DHCPv6 server. This could be a pool or static reservations. I prefer the latter.

- The host is expected to learn its IPv6 default gateway from the router (or top-of-rack L3 switch) on the LAN.

- The kernel and initrd are fetched over TFTP or HTTP. I install packages over HTTP, but if you’re an NFS shop and your NFS setup supports v6, it should work.

- Most of this applies to Fedora too, for anything later than v13, and of course to RHEL 7.

- Your package repos, nameservers, kickstart server and other resources used during kickstart are accessible over v6, either singly or dual-stacked.

- The only access to the system is via serial console and SSH, as that’s what real datacenters have. No graphical UI, no VNC, no KVM, no VGA.

The main problems I encountered with Dracut and Anaconda doing IPv6-only installs were due to race conditions between bringing up the NIC and proceeding to download things before it had full routing. It can take a second or two for the IPv6 neighbor discovery protocol to do its thing, perform duplicate address detection (DAD), and learn the v6 gateway from a router advertisement. This would frequently cause fetching the kickstart configuration to fail, cause package repos to be marked as unusable, or kill the install mid-installation.

Fortunately there are four key problems to watch out for and they’re easily hacked around with some simple shell script. Once they’re addressed you’ll have no problem installing CentOS over an IPv6-only network. It does involve rebuilding both the initrd ramdisk and the stage2 squashfs image until things are fixed upstream.

The even better news is that at least two of the fixes for this have been either accepted or merged upstream so hopefully this post will eventually be obsolete!

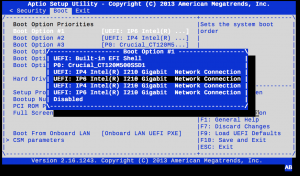

Kernel and initrd

The PXE specification itself is IPv4-only. The PXELINUX typically used for kickstart isn’t an option here, even in newer versions with the lwIP stack, because it’s still v4-only. To download the kernel and initial ramdisk over a v6 network you’re left with things like UEFI IPv6 PXE (which not many boards support yet), iPXE, chain-loading v4 PXE -> v6 iPXE or, better yet, GRUB2 with its recently added IPv6 support. Hopefully in the future I’ll post more about the options here.

Whichever network boot program you use, in CentOS 7 the kernel command line options you’d normally give to the NBP to pass on to the stage1 ramdisk have changed syntax a bit and some options have been deprecated since CentOS 6.

For example, if you want to statically configure an IP address, prefix, and name servers on the kernel command line, they’d look something like this:
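A minimal sketch of such a command line, assembled in shell so each piece is visible. Every address, the hostname and the kickstart URL are placeholders chosen for illustration, and the option names (`ip=`, `nameserver=`, `inst.ks=`, `net.ifnames=0`, `biosdevname=0`, `inst.sshd`) are the ones I understand dracut and Anaconda to document; check them against your release before relying on this.

```shell
#!/bin/bash
# Placeholder values for illustration only.
addr="2401:beef:20::11"                 # static address for the install environment
prefix=64                               # prefix length
host="newbox"
iface="eth0"
ns1="2401:beef:20::a53"
ns2="2401:beef:20::b53"
ks="http://[2401:beef:20::20]/ks.cfg"   # IPv6 literals in URLs need brackets

# dracut ip= fields: client:peer:gateway:prefix:hostname:interface:autoconf
# The peer and gateway fields are left empty, and "none" asks for no DHCP
# and no autoconfiguration.
cmdline="ip=[$addr]:::$prefix:$host:$iface:none"
cmdline="$cmdline nameserver=$ns1 nameserver=$ns2"
cmdline="$cmdline inst.ks=$ks net.ifnames=0 biosdevname=0 inst.sshd"

echo "$cmdline"
```

The resulting string is what would be appended to the kernel line in the network boot program's configuration.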

This tells dracut to boot with a static IP address and a /64 prefix, to skip DHCPv4/DHCPv6 requests and SLAAC, to use the two nameservers for resolving hostnames, and where to find the kickstart configuration. It also disables the new persistent Ethernet naming scheme. I personally don’t like having NICs named “enp0s1”; “eth0” is fine and easier to script with. Finally, “inst.sshd” enables SSH in Anaconda so you can ssh to the system while it’s installing.

This command line config is only used during installation and is completely independent of the network configuration you specify in the Anaconda kickstart config file, which is what gets applied to the target system.

I strongly prefer performing installations with a static IP address (e.g. dhcp with a reservation). It’s very handy when you have processes on a build server that want to log in to interrogate the build process, if you need to SSH in to see why things broke, or to correlate things like logfiles.

Stage1 (initrd.img) and downloads

The kernel and initrd get fetched over the network and loaded into memory. Systemd kicks off Dracut which does a bunch of prerequisite steps for preparing to start the installer. Because we’re doing a network install, Dracut initializes the NIC and begins downloading the kickstart and stage2 image which contains the Anaconda installer … or so you think.

Gotcha #1: In reality what happens is that the NIC gets configured with an IPv6 address and, because the NIC is now considered “online”, Dracut’s scripts proceed immediately to download the kickstart config via the hook script 11-fetch-kickstart-net.sh. The problem is we very likely haven’t had time to do DAD or discover our v6 default gateway. This causes Dracut to fail and eventually drop to an emergency shell. The telltale sign of this problem is the “Network is unreachable” error from curl:

dracut-initqueue[1219]: curl: (7) Failed to connect to 2401:beef:20::20: Network is unreachable
dracut-initqueue[1219]: Warning: failed to fetch kickstart from http://[2401:beef:20::20]/ks.cfg

My fix for this has been to add a Dracut hook script named 10-network-sleep-fix.sh to the initrd that does nothing but literally sleep 4 seconds. I also print out the default gateway as a debugging aid.

/usr/lib/dracut/hooks/initqueue/online/10-network-sleep-fix.sh:

#!/bin/bash
# 10-network-sleep-fix.sh
#
# Goes in /usr/lib/dracut/hooks/initqueue/online/10-network-sleep-fix.sh
# Sleep four seconds after bringing up NIC to give time to get a v6 gateway
# Print to both stdout (for journal) and stderr (for console)
echo "*****" 1>&2
echo 1>&2
echo "****: hack: sleeping 4 seconds to ensure network is usable before" \
     "fetching kickstart+stage2" 1>&2
sleep 4
echo "**** Our v6 default gateway is: $(ip -6 route show | grep default)" 1>&2
echo "****" 1>&2
echo "****" 1>&2

This will cause the Dracut hooks to pause long enough to get us a default gateway. In my experience anything under four seconds was too short; four seems to be the sweet spot between consistent success and not excessively dragging out the boot process.
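A possible variation on the fixed sleep, shown only as a sketch and not something I have run in production: poll for a v6 default route and give up after a timeout. The function name `wait_for_v6_gateway` and the `ROUTE_CMD` override are made up for this example; `ROUTE_CMD` exists only so the loop can be exercised without a real NIC. Note that a default route showing up does not by itself prove DAD has finished.

```shell
#!/bin/bash
# Command used to look up the v6 default route; overridable for dry runs.
ROUTE_CMD=${ROUTE_CMD:-"ip -6 route show default"}

# wait_for_v6_gateway [TIMEOUT]
# Returns 0 as soon as a default route is listed, 1 after TIMEOUT seconds.
wait_for_v6_gateway() {
    local timeout=${1:-10} waited=0
    while [ "$waited" -lt "$timeout" ]; do
        # Any non-empty output means a default route is installed.
        if [ -n "$($ROUTE_CMD 2>/dev/null)" ]; then
            return 0
        fi
        sleep 1
        waited=$((waited + 1))
    done
    return 1
}
```

In a hook script this would be called as `wait_for_v6_gateway 10 || echo "no v6 gateway yet" 1>&2`, so a slow network costs at most the timeout while a fast one proceeds right away.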

Upstream bug report: https://bugzilla.redhat.com/show_bug.cgi?id=1292623

Anaconda / stage2 (squashfs.img)

Ok! Dracut has downloaded our LiveOS stage2 image (e.g. os/x86_64/LiveOS/squashfs.img) which contains all of the Anaconda installer code and performed a pivot-root over to it. Here’s an example of a couple of directives in a kickstart configuration to statically define our IPv6 addresses:

timezone America/Los_Angeles --isUtc --ntpservers=2401:beef:20::a123,2401:beef:20::b123
network --hostname=newbox.wann.net --bootproto=static --ipv6=2001:40:8022:1::11 \
  --nameserver=2401:beef:20::a53,2401:beef:20::b53 --activate

If you need to install a dual-stacked server with v4 and v6, you can still specify the --ip, --netmask, and --gateway options in addition to the v6 options.

The new systemd instance in stage2 will start up a nice tmux session (if you’re on console) and start Anaconda. Anaconda will then start up NetworkManager to re-initialize our NICs to take control for purposes of the install.

Here’s where we’ll hit a few more problems that’ll cause us to fail.

Gotcha #2: Dracut will write out its network configuration to /etc/sysconfig/network-scripts/ifcfg-eth0 with an empty “IPADDR=” line and “BOOTPROTO=static”. This causes NetworkManager to think there’s a v4 configuration to import as well as to treat our currently “UP” NIC as another connection. Rather than use the existing UUID from ifcfg-eth0, NetworkManager creates a second connection with a new UUID. Anaconda then dies because it can’t find the original UUID.

This throws an Anaconda “SettingsNotFoundError” traceback that looks something like this:

Traceback (most recent call first):
  File "/usr/lib64/python2.7/site-packages/pyanaconda/nm.py", line 707, in nm_activate_device_connection
    raise SettingsNotFoundError(con_uuid)
  File "/usr/lib64/python2.7/site-packages/pyanaconda/network.py", line 1209, in apply_kickstart
    nm.nm_activate_device_connection(dev_name, con_uuid)
...
SettingsNotFoundError: SettingsNotFoundError('5cce9753-76ff-1f2e-8e09-918a15d4229d',)

Fortunately this has been fixed upstream in NetworkManager 1.0, but this hasn’t been backported to CentOS 7 yet: https://mail.gnome.org/archives/networkmanager-list/2015-October/msg00015.html

Until that’s done, the fix here is to create a really simple systemd service that runs before Anaconda loads (“anaconda.target”) and seds out the BOOTPROTO line. Drop these two files into the stage2 image:

fix-ipv6.service:

# Goes into /usr/lib/systemd/system/fix-ipv6.service and
# /etc/systemd/system/basic.target.wants/fix-ipv6.service is a symlink
# to this unit file.
#
[Unit]
Description=IPv6-only ifcfg-eth0 hack
Before=NetworkManager.service

[Service]
Type=oneshot
ExecStart=/etc/sysconfig/network-scripts/fix-ipv6-only.sh

fix-ipv6-only.sh:

#!/bin/bash
#
# Goes into /etc/sysconfig/network-scripts/fix-ipv6-only.sh
#
# If we have no IPADDR= set (v6-only), remove BOOTPROTO so
# NetworkManager will parse the config file correctly
#
ifcfg=/etc/sysconfig/network-scripts/ifcfg-eth0
# grep -q only sets the exit status; quoting keeps the regex away from the shell
if grep -q '^IPADDR=$' "$ifcfg"; then
    echo "XXX hack: no IPv4 addr set (IPADDR=) in ifcfg-eth0, fixing for v6-only"
    sed -i '/^BOOTPROTO=/d' "$ifcfg"
fi
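Placing the two files can be scripted against the mounted rootfs image. The following is a sketch only: the function name `install_ipv6_fix` and its two arguments are invented for this example, while the destination paths are the ones given in the comments of the two files above.

```shell
#!/bin/bash
# install_ipv6_fix ROOT SRC
#   ROOT: directory where rootfs.img is mounted (e.g. /tmp/rootfs-img)
#   SRC:  directory holding fix-ipv6.service and fix-ipv6-only.sh
install_ipv6_fix() {
    local root=$1 src=$2
    local unit_dir="$root/usr/lib/systemd/system"
    local wants_dir="$root/etc/systemd/system/basic.target.wants"
    local script_dir="$root/etc/sysconfig/network-scripts"

    mkdir -p "$unit_dir" "$wants_dir" "$script_dir" || return 1
    cp "$src/fix-ipv6.service" "$unit_dir/" || return 1
    cp "$src/fix-ipv6-only.sh" "$script_dir/" || return 1
    chmod 0755 "$script_dir/fix-ipv6-only.sh" || return 1

    # The link target is the path as seen from inside the image, so the
    # link looks dangling from the build host until the image is booted.
    ln -sfn /usr/lib/systemd/system/fix-ipv6.service \
        "$wants_dir/fix-ipv6.service"
}
```

It would be run as root between mounting rootfs.img and unmounting it, for example `install_ipv6_fix /tmp/rootfs-img /tmp/fixes`.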

Now Anaconda and NetworkManager can properly find the NICs and begin the installation. The final two gotchas to hack around show up between the %pre scripts and the package downloads.

Gotcha #3: NetworkManager will reinitialize our NIC and cause us to lose our v6 default gateway momentarily. This causes a race condition because while NM is finishing bringing up the NIC to a fully CONNECTED_GLOBAL state, Anaconda immediately starts trying to download package repo metadata (“.treeinfo”). If the package repos are not on the same LAN as the host you’re installing, you will likely fail here. Because .treeinfo fails to download, Anaconda will mark the repository as unusable. This results in a “software selection failure” on console.

3) [!] Software selection (Installation source not set up)
4) [!] Installation source (Error setting up software source)

There’s not a perfect fix here as it becomes tricky to know what connected state we need to be in before proceeding. A good compromise has been to add retry logic to the .treeinfo portion of Anaconda. There’s already retry code in Anaconda for downloading individual packages. I replicated this within packaging/__init__.py to handle retrying .treeinfo until we have working routing.
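The retry idea itself is simple enough to show in shell. To be clear, this is not the Anaconda patch (that lives in Python inside packaging/__init__.py); it is a generic sketch of retrying a fetch until routing works, with a made-up helper name `retry` and a placeholder repo URL in the usage comment.

```shell
#!/bin/bash
# retry MAX DELAY CMD [ARGS...]
# Runs CMD until it succeeds, at most MAX times, sleeping DELAY seconds
# between attempts. Returns 0 on the first success, 1 if every attempt fails.
retry() {
    local max=$1 delay=$2 attempt=1
    shift 2
    until "$@"; do
        if [ "$attempt" -ge "$max" ]; then
            return 1
        fi
        attempt=$((attempt + 1))
        sleep "$delay"
    done
    return 0
}

# Hypothetical usage against a placeholder repo:
# retry 10 3 curl -fsS -o /tmp/treeinfo \
#     "http://[2401:beef:20::20]/centos/7/os/x86_64/.treeinfo"
```

A bounded number of attempts matters here: a repo that is truly unreachable should still fail, just not within the first second or two of NetworkManager bringing the NIC back up.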

This is enough to start getting kickstart to execute post scripts and maybe install a few packages but we’re not out of the woods yet.

Upstream bug report: https://bugzilla.redhat.com/show_bug.cgi?id=1292613. My patch was accepted but hasn’t been merged in yet.

Gotcha #4: NetworkManager doesn’t support both static IPv6 addressing and dynamic route selection. Particularly if the Anaconda installer environment is running with a static v6 address and no v6 gateway is specified on the kernel command line, NetworkManager sets the sysctls “net.ipv6.conf.eth0.accept_ra” and “accept_ra_defrtr” to 0. This slams the door shut on learning a gateway via router advertisements. If a default gateway was learned prior to these sysctls being disabled, things like package downloads may work for a short period of time until the TTL expires or it gets flushed.

The workaround for this is a flat-out hack. At the top of my common %pre script I have a background loop that does nothing but set these values to 1 over and over again. This makes up for the shortcoming in NetworkManager and immediately resets the accept_ra and accept_ra_defrtr sysctls to enable learning a gateway via router advertisement. It looks something like this:

%pre
...
(
for i in {1..300}; do
    date
    sysctl -w net.ipv6.conf.eth0.accept_ra=1
    sysctl -w net.ipv6.conf.eth0.accept_ra_defrtr=1
    sleep 1
done
) > /tmp/networkmanager-hack.log 2>&1 &
...

This will run in the background for five minutes, allowing for any lengthy pre-script operations to happen (e.g. RAID setup) in the interim. It redirects all of its output to a log file so it doesn’t pollute preinstall.log.
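If the interface is not always eth0, the same hack can be parameterized. This is a sketch under assumptions: the function names are invented, it writes to the /proc/sys files directly instead of calling sysctl, and `SYSCTL_ROOT` exists only so the logic can be tried against a scratch directory.

```shell
#!/bin/bash
# Root of the sysctl tree; overridable so this can be tried without root.
SYSCTL_ROOT=${SYSCTL_ROOT:-/proc/sys}

# enable_ra IFACE: set accept_ra and accept_ra_defrtr to 1 for IFACE.
# Returns 1 if the interface has no IPv6 sysctl directory.
enable_ra() {
    local conf="$SYSCTL_ROOT/net/ipv6/conf/$1"
    [ -d "$conf" ] || return 1
    echo 1 > "$conf/accept_ra" && echo 1 > "$conf/accept_ra_defrtr"
}

# keep_ra_enabled IFACE [SECONDS]: re-apply once a second for SECONDS seconds.
keep_ra_enabled() {
    local iface=$1 seconds=${2:-300} i
    for ((i = 0; i < seconds; i++)); do
        date
        enable_ra "$iface"
        sleep 1
    done
}

# Hypothetical usage at the top of %pre:
# keep_ra_enabled eth0 300 > /tmp/networkmanager-hack.log 2>&1 &
```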

Upstream bug report: https://bugzilla.gnome.org/show_bug.cgi?id=747814

Fin

And that’s it. With these four fixes you can completely install CentOS 7 over an IPv6-only network. I’ve submitted bug reports upstream and have been working to get these issues resolved so people in the future can install over v6 out of the box.

Addendum: TL;DR for rebuilding initrd and squashfs.img

initrd.img

This image is usually a gzip- or xz-compressed archive. Uncompress it and extract it to a directory with cpio. Rebuilding it is a matter of rebuilding the archive with cpio and re-compressing it with gzip or xz.

# cp $somewhere/initrd.img /tmp ; cd /tmp
# mkdir init.fs ; cd init.fs
## Extracts contents of initrd.img to init.fs directory
# xz -dc ../initrd.img | cpio -vid
OR
# gzip -dc ../initrd.img | cpio -vid
## hack hack hack
# find . | cpio -o -H newc | gzip -9 > ../initrd-new.img

Pro tip: you don’t have to keep the initrd.img name; you can call it whatever you want. If your image differs from the one distributed by upstream, renaming it is a good idea. Just remember to update the filename in your PXE or GRUB configuration used for kickstart.
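Since the image may be either gzip- or xz-compressed, a small helper can pick the right decompressor by looking at the file's magic bytes. This is a sketch; the function names are invented for this example, and it assumes the image is a single compressed cpio archive.

```shell
#!/bin/bash
# initrd_compression FILE: print "gzip", "xz" or "unknown" based on the
# first bytes of FILE (gzip starts with 1f 8b, xz with fd 37 7a 58 5a 00).
initrd_compression() {
    local magic
    magic=$(head -c 6 "$1" | od -An -tx1 | tr -d ' \n')
    case "$magic" in
        1f8b*)        echo gzip ;;
        fd377a585a00) echo xz ;;
        *)            echo unknown ;;
    esac
}

# unpack_initrd FILE DIR: extract FILE into DIR with the matching tool.
unpack_initrd() {
    local file dir=$2
    file=$(readlink -f "$1") || return 1   # absolute path survives the cd
    mkdir -p "$dir" || return 1
    case "$(initrd_compression "$file")" in
        gzip) (cd "$dir" && gzip -dc "$file" | cpio -id) ;;
        xz)   (cd "$dir" && xz -dc "$file" | cpio -id) ;;
        *)    echo "unrecognized compression: $file" 1>&2; return 1 ;;
    esac
}
```

If you recompress with xz instead of gzip, the kernel's decompressor generally expects `xz --check=crc32`; gzip avoids that wrinkle at the cost of a larger image.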

squashfs.img

The LiveOS squashfs image is a squash filesystem with an ext4 sparse image inside it. This means you can’t just mount the squashfs.img, make modifications and unmount it. You must mount it, make a copy of the rootfs.img within, make changes to the copied rootfs.img and create a new squashfs image.

# cp $somewhere/os/x86_64/LiveOS/squashfs.img /tmp ; cd /tmp
# mkdir rootfs-img squashfs-img LiveOS
# mount -o loop squashfs.img squashfs-img
# cp squashfs-img/LiveOS/rootfs.img .
# mount -o loop rootfs.img rootfs-img
# cd rootfs-img
## hack hack hack
# cd /tmp
# umount rootfs-img
# cp rootfs.img LiveOS/rootfs.img
# mksquashfs LiveOS squashfs-new.img -comp xz -keep-as-directory

Pro tip: again, you can keep your modified squashfs.img in a separate location from the one that came with the distribution. The twist here is that your squashfs.img must be in a subdirectory named LiveOS. This directory can live wherever you want, e.g. http://buildserver/centos/7/LOLCATS/LiveOS/squashfs.img. On the kernel command line for PXE or GRUB, you’ll need to specify the inst.stage2 directive pointing at the directory that contains LiveOS, e.g. inst.stage2=http://buildserver/centos/7/LOLCATS/.

In practice I keep each new squashfs image in a directory named with a release number such as “/7.x/7.2r5/LiveOS” so I can make incremental changes to Anaconda and keep them organized.
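That layout can be sketched as a small publish step. The function name, its arguments and the example paths are all hypothetical; the only fixed parts are the LiveOS subdirectory, the squashfs.img filename, and inst.stage2 pointing at the parent of LiveOS.

```shell
#!/bin/bash
# publish_stage2 IMAGE DOCROOT RELEASE BASE_URL
# Copies IMAGE to DOCROOT/RELEASE/LiveOS/squashfs.img and prints the
# matching inst.stage2= argument for the kernel command line.
publish_stage2() {
    local image=$1 docroot=$2 release=$3 base_url=$4
    mkdir -p "$docroot/$release/LiveOS" || return 1
    cp "$image" "$docroot/$release/LiveOS/squashfs.img" || return 1
    # inst.stage2 names the directory that contains LiveOS, not the image.
    echo "inst.stage2=$base_url/$release/"
}

# Hypothetical usage:
# publish_stage2 /tmp/squashfs-new.img /var/www/html/centos/7 7.2r5 \
#     http://buildserver/centos/7
```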
