The self-sufficient side of me has always been fascinated by wilderness medicine: how do you care for somebody who’s hurt or sick when you’re hours or days away from care? How do you improvise what you need to treat that person and make it possible to move them? When I was growing up in a rural area, we didn’t have 911, and a sheriff or ER could easily be 30+ minutes away, and that was only after you got back to somewhere you could call or send somebody for help. This is part of what taught me it’s important to be able to take care of yourself.

Today I frequently go out on my own on road trips or hikes that put me hours into the middle of nowhere: west Texas, the Nevada desert, or the side of some mountain in Washington. Very often there’s no cell service, and while I have an amateur radio license and access to a transceiver that could reach dozens of miles out, it’s only useful if I know the area repeaters and if there are people listening who could help.

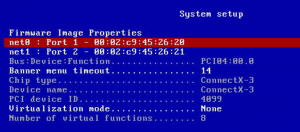

I learned about the NOLS Wilderness First Responder course years ago, and it seemed very interesting because it went way beyond patching boo-boos and doing CPR, the things taught in a basic first aid class. It covered all sorts of illnesses and problems that could happen in the backcountry: broken bones, wound management, head and spinal injuries, and more. Further, it wasn’t just textbook work; it was a hands-on course to practice doing these things. Unfortunately it was 10 days long and I never had (or put aside) the time to travel and take the course.

After my recent work sabbatical I dusted off the NOLS website and signed up for the WFR course here in California. One thing that bothered me from the course outline was having to pretend to be a patient and wear moulage (fake injury makeup). I totally get how it’s necessary for the experience (more so now after actually doing it), but I was pretty meh about it up front. Even as a kid I was never a fan of make-believe, costumes, or Halloween.

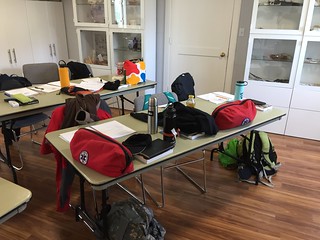

The class in San Francisco booked quickly, so I signed up for the one in Sausalito. This was a long haul to make every day across the bay from Fremont, so I got a cheap motel in Mill Valley. The classroom was actually at Point Bonita YMCA, right on the coast near the Golden Gate in the Marin Headlands. Being August (Fogust) I expected it to be cold and foggy, and it turned out it was the whole time. The weather upped the realism because we were frequently wearing multiple layers, snug jackets, and big coats, things we’d normally wear in cool environments, all of which had to be dealt with during scenarios. Aside from the fog and drizzle, we had the Golden Gate Bridge and the Pacific Ocean as the backdrop to our outdoor classes. You couldn’t ask for a better setting around here.

Our instructors were Ryland and Sheri, and there were around 23 (?) people in the class. They had varied backgrounds, such as youth group leaders, park personnel, guides, and a few of us engineers who like the outdoors. Most were doing it for personal enrichment, some as a job requirement.

After some initial classroom time on the first day, we headed outside for our first scenario. If memory serves, it covered how to size up the scene, approach the patient, and go through the ABCs. Another scenario worked up to head-to-toe assessments, getting vitals, and taking a SAMPLE history. I had read a good hunk of the textbook in the months leading up to the class, but when I actually did my first assessment on a real person I went into full-on dummy mode and forgot everything I had read.

Each day was a combination of going over a topic in the classroom or outside in a group huddle, and going through 2-3 scenarios. For scenarios a group of students would be selected to be patients, go outside and be briefed on a story of their injuries and symptoms, then go lie or sit down somewhere and wait to be rescued. A few dabs of moulage makeup were usually used in each scenario to simulate bruising of a limb from a sprain/fracture, bruising from landing on the back, cyanotic lips, infection, rashes, and/or abrasions on faces or hands; these were then concealed under clothing for the rescuer to discover. Nothing outrageously gory at all, just something to help visualize the signs.

Rescuers would remain in the classroom until they were told it was time to go, given a very short summary of what happened (e.g. a friend was slacklining and fell, somebody wrecked their bike, or sometimes “you just found this person”), and sent out to find the patients. At first we usually paired up two rescuers to a patient, but over time we also did a lot of solo rescues, and then got into full teams of rescuers. (Many of our mock scenarios happened at Yosemite, so watch out for that place. We also had a few oddly freak skydiving accidents for variety’s sake, resulting only in broken fingers. What a weird sport.)

Each time we would size up the scene, approach the patient and ask if we could help, then go through our ABCDEs: check airway, breathing, and circulation/bleeding, decide if we needed to stabilize their neck/spine, and expose the injuries. Next we did a full, thorough head-to-toe exam looking for any injuries, pain, or tender areas, got a set of vital signs, took a SAMPLE history, took more vitals to find trends, and dug into any interesting points. Depending on the injury we’d try to treat or immobilize the patient, decide if they needed to be evacuated, and come up with a verbal SOAP note to present. The SOAP note was a special report format we’d call in to a search and rescue group, other rescuers, or some other higher level of care. (S = Subjective, information from the patient, “what could be lied about”, OPQRST details; O = Objective, facts from vital signs and observations, “the truths and facts”; A = Assessment, what we thought was going on, what we did; P = Plan for the patient, including long-term [e.g. overnight care]).

After each scenario we’d get together both as patient+rescuer(s) and the class as a group, go over what went well, what we missed, go over questions, and practice presenting a SOAP note to the group based on what we just went through.

We learned how to safely move people even if we were by ourselves. Once I saw where to grab and how to pull, it was no problem to move even my bigger classmates. If we suspected there was a possibility for a head/neck/spinal injury we’d control their head until we could check them out further. We frequently practiced rolling patients onto their sides for examinations, putting them on pads, putting them into recovery position in case they were to vomit or we had to leave them for help. Later we got into learning how to pack them in various litters for carrying them out as a team.

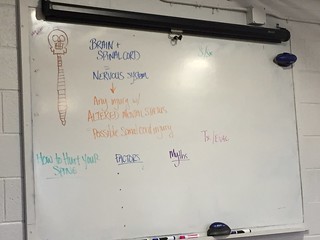

We learned how to do focused spinal exams to look for any injury or tenderness to the spine and check for things like tingling, numbness, and sensation and motion in the limbs. If nothing abnormal was found, we were cleared to stop holding the head and let the patient move around. I found out this is something specific to wilderness medicine protocols; an urban EMT wouldn’t do this.

Even when I was acting as a patient I found the scenarios very useful. For instance, if in a scenario I was afflicted with high altitude cerebral edema (HACE), I became much more familiar with the signs and symptoms associated with HACE because I would pretend to be irritated, say I had a pounding headache and hadn’t slept well last night, pretend to be nauseous, and act out ataxia, which made it hard to walk.

As time went on I got more comfortable acting as a patient. Some classmates would really get into it and ad lib some awesome reactions, pains, fake vomiting, or combativeness. It was a tremendous help in the learning process because we had to react to what was happening, such as rolling them on their side immediately if they thought they were going to vomit or choke, then get back into our assessments. Based on how we found them we might have to change up our priorities. There were often a number of symptoms and things to remember as a patient, and sometimes I’d forget a key thing to present while they did their assessment, which made me feel bad that I didn’t give my rescuers the full picture.

In the classroom we covered a lot of topics over the ten days. For each topic we dove in for a few hours and went over what was happening in the body, what signs and symptoms a given injury or illness would present, how to tell if they were minor or severe, treatment options, and whether to evacuate or immediately evacuate the patient to get to higher level care. I found it most useful to write down everything in my workbook during class, even if it was exactly what was already on the same page, because it made it sink into my head better. Ryland and Sheri would take turns talking about a topic, and they did a great job of teaching it. They added in personal experiences, answered a ton of questions, and threw in copious amounts of humor to make it very engaging. Doing scenarios after class time really tied it all together and made it real.

Later into the week we got into larger scale rescues where 4-6+ people worked as a team, either on a solo patient or a group of patients. It was a bit chaotic at first as people settled into who would do what, keeping the leader out of the direct action so they could lead, and deciding on treatments.

Rodeo Beach

One memorable mass casualty scenario was a “beach rescue” where we had multiple patients on the beach (on the actual Pacific Ocean at Rodeo Beach), and multiple rescuers. The moulage was kicked up considerably with all sorts of brutal injuries, patients were screaming and running around, which made it all very real. (Bystanders on the beach had to be told this was a training exercise.)

A few students were designated as incident commander, assistant, and gear keeper, and were tasked with organizing the rest of the rescuers. As an added twist, a few of our classmates selected as patients also spoke Spanish, so they decided to throw us a curve ball by only speaking Spanish during the rescue. This was a considerable difficulty with my very limited Spanish. Fortunately my partner could speak Spanish and she took on communicating with our patient. There were a few other students who could also speak Spanish, so our incident commander also took care of organizing them and sending them where their language skills were needed. Between getting our patients out of the surf, watching the tide, keeping them and ourselves dry and warm, doing assessments and treatments, frequently communicating our patients’ status to the incident commander, requesting gear, re-prioritizing who got evacuated, and translating the Spanish, there was a lot going on!

Another large scale rescue happened at night. We were divided into groups and sent out to different points of the park. We didn’t know when, who, or where our patient would be or what we’d find, but it quickly became apparent as things unfolded. We took our day packs with us and had to treat our patient with whatever gear we carried, so there was a good amount of dumping packs and improvisation going on. A few concerned bystanders wandered by on the trail during all of this as our patient was screaming in mock pain, so I had to give them a thumbs up that we had it under control. As part of the story we were lost and had to wait until 9:30 PM or so for somebody to find us. It was cold, windy, and dark; this was to teach us what it’s like to be with a patient for an extended amount of time and tend to them long term. While we debriefed afterwards in the dark, I learned I had done a poor job on our patient’s tib/fib break by deciding to splint only the lower part of the leg below the knee instead of the entire leg. The disappointment of our instructor upon inspection was palpable because it wouldn’t have worked. The next morning we got together again, dumped all of our gear, and tried to make a better leg splint out of empty packs. Using three empty backpacks and some rope, it worked out a lot better than the night before. It was all certainly a learning experience!

Leg splint made of backpacks

As a class we did a pretty good job of bonding with each other. We learned how to help each other, divide up the workload of patient care, and give feedback to each other on what we could do better. By the end we had taken so many vital signs and done so many head-to-toe exams on each other, I think we knew each other better than our own doctors did. I think we wound up going through at least 20 scenarios, including the two big team exercises.

At the end of the course we took a written test and did a practical exam. The practical exam was a relatively simple dual-rescuer, single-patient scenario where there were a couple of things going on with the patient to treat and manage. After all of the scenarios I had been through I was pretty confident about it, but there was a set of exam criteria that we had to get right or we’d have to re-test, which made me nervous. My partner and I passed, hooray!

I would wholeheartedly recommend this course to anyone who ventures outside. I had a great time and feel like I learned quite a lot. Reading the textbook was one thing, but it was a whole ‘nother experience to constantly practice scenarios and examinations and to make mistakes. The NOLS website also has a good set of case studies, test question banks, and videos to review. I’ve learned to love how versatile triangle bandages and Ace elastic bandages are, and I will always carry those in my packs!

It was stressed that our skills would deteriorate over time, and we were urged to frequently practice doing patient assessments and to consider volunteering our time with an organization, search and rescue team, or fire department, or even just taking vitals at a health fair, to help keep our skills up to date.

I don’t know when or where these skills will come in handy. I feel much more confident about walking up on something unknown, and even if I can’t do much of anything, I can at least assess the situation and get information to other rescuers.

Tags: wfr, wilderness medicine
